Share this article and save a life!

The History of AI:

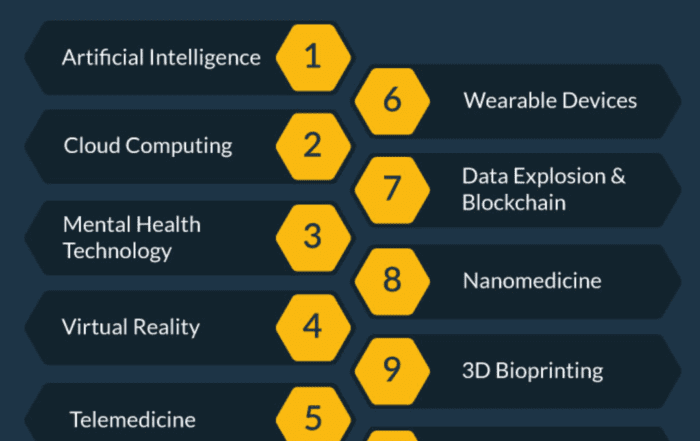

The history of AI in healthcare dates back to the 1950s, when researchers began exploring the use of computers to diagnose medical conditions. In the decades that followed, AI technologies such as machine learning and natural language processing were developed, and these technologies have been applied to a variety of healthcare tasks, including radiology.

- 1940-1960: Birth of AI in the wake of cybernetics

- 1980-1990: Expert systems

- Since 2010: a new bloom based on massive data and new computing power

Artificial intelligence (AI) has the potential to revolutionize the healthcare industry. By automating certain tasks and providing doctors with more accurate and timely information, AI can help improve patient outcomes and reduce healthcare costs. However, despite its potential benefits, AI has not yet been widely adopted in the Medicare program, specifically in the field of radiology.

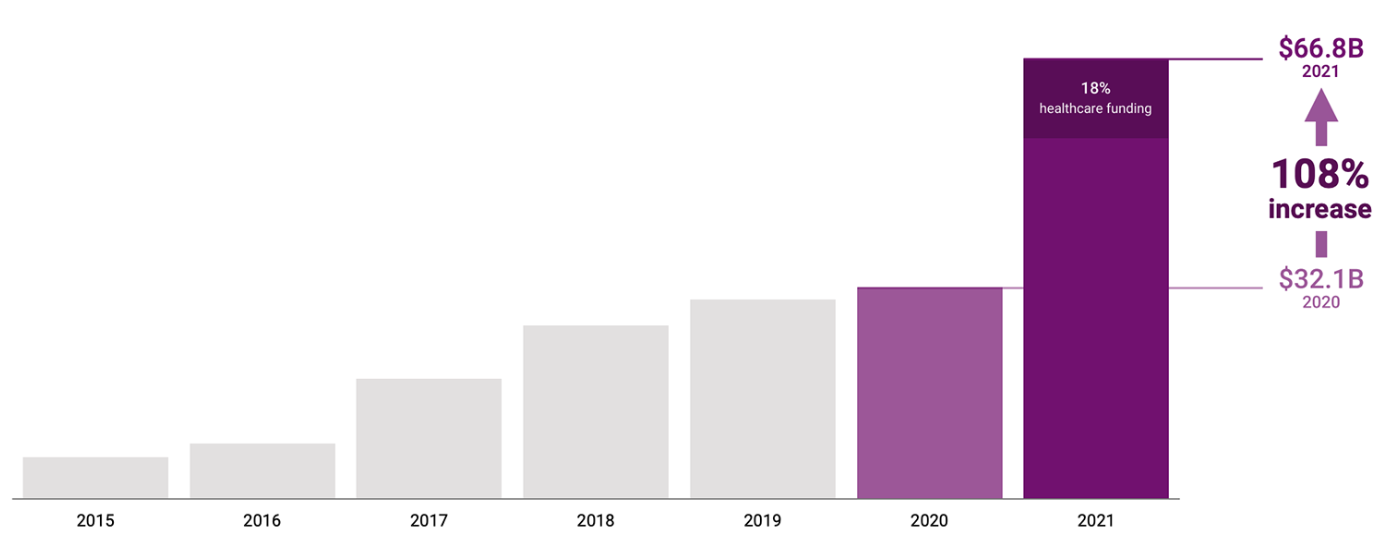

Fast forward to today: the investment opportunity

Global investment in artificial intelligence startups rose 108% year over year in 2021, reaching $66.8 billion. Healthcare AI accounted for nearly one-fifth of that total, CB Insights reports, as funding doubled in 2021.

Why hasn't AI been successful? A few reasons

- One reason AI has not been successful in Medicare is that healthcare is a complex and highly regulated field. Developing AI algorithms for medical applications requires a deep understanding of both computer science and medicine, and the process is further complicated by the need to comply with various regulations and standards. This has made it difficult for AI researchers to develop and commercialize their products.

- Another reason AI has not been successful in Medicare is that there is still little evidence to support its use in radiology. While some studies have shown that AI algorithms can outperform human doctors at certain tasks, such as identifying certain types of cancer on medical images, these studies are often small and not widely replicated. As a result, many doctors remain skeptical about the benefits of AI and are hesitant to adopt it in their practice.

- Medicare has also struggled to implement AI in radiology because, until recently, there have been no incentives for health providers to use it. Incentivizing providers to use AI requires changes to the current reimbursement system, such as new Current Procedural Terminology (CPT) codes that pay radiologists for using AI. Fortunately, this is now changing, with CMS introducing new CPT codes for lung cancer that cover a second opinion on a nodule's malignancy risk score, something OatmealHealth.com is working on with its partners at several FQHCs.

- Lack of understanding: Despite the potential benefits of AI in healthcare, many people, including healthcare professionals, are still not familiar with the technology. This lack of understanding can make it difficult to implement AI systems in healthcare settings.

- Another reason why Medicare has struggled with AI in radiology is the lack of reliable data. In order for AI algorithms to be effective, they must be trained on large amounts of high-quality data. However, many of the data sets used by Medicare and other healthcare organizations are fragmented, inconsistent, and incomplete, which makes it difficult for AI algorithms to accurately identify and diagnose medical conditions. Furthermore, there are significant privacy concerns surrounding the use of AI in healthcare, which has led to a reluctance among patients and providers to share their medical data.

- Deployment is hard. Hospitals are not set up to use AI. The most common radiology viewing software cannot display AI results. Most hospitals don’t have GPU machines, and sending images to the cloud can be tricky due to HIPAA regulations and security requirements. EHR and imaging data live on separate systems, so integrating the two is difficult. Despite pushes for standardization, every hospital uses different coding systems and series description conventions for medical images. Serious problems like image corruption or gross mislabeling are relatively rare (maybe 0.25%), but when they do occur, AI software typically lacks the out-of-distribution or anomaly detection that would alert the user that the result is very likely invalid. Routing the correct images to the right AI models is also an under-appreciated challenge. AI models today are designed to work only on very specific types of images, for instance, frontal chest X-rays, diffusion-weighted brain MRI, or abdominal CT with contrast. Most models also work only within a limited range of scanner settings. Because overworked technicians often fill in DICOM metadata carelessly, it is a very imperfect guide to what an image contains and how it was acquired, which makes image routing a complex and daunting task. (Until now there has been little pressure to record such metadata accurately in a standardized format, so it generally isn’t.)

- A 2020 survey of 1,427 radiologists in the US found that just a third reported using any type of AI, although 20 percent of practices planned to buy AI tools in the next one to five years.
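The image-routing problem described under deployment can be made concrete with a small rule-based sketch. It assumes each image series arrives as a dictionary of DICOM attributes keyed by their standard keywords (`Modality`, `ViewPosition`, `BodyPartExamined`, `SeriesDescription`, `ContrastBolusAgent`); the model names and matching rules are hypothetical, and a real router would need site-specific tuning because series description conventions differ between hospitals. Series that match no rule return `None`, so a person can review them instead of an AI model silently receiving the wrong kind of image.

```python
from typing import Callable, List, Optional, Tuple


def tag(meta: dict, keyword: str) -> str:
    """Read a DICOM attribute by keyword, normalized to upper case; missing -> ''."""
    return str(meta.get(keyword) or "").strip().upper()


# Hypothetical registry of (model name, predicate over series metadata).
# The rules are illustrative assumptions, not any vendor's actual logic.
ROUTES: List[Tuple[str, Callable[[dict], bool]]] = [
    ("frontal_cxr_model",
     lambda m: tag(m, "Modality") in {"CR", "DX"}
     and tag(m, "ViewPosition") in {"PA", "AP"}),
    ("brain_dwi_model",
     lambda m: tag(m, "Modality") == "MR"
     and tag(m, "BodyPartExamined") in {"BRAIN", "HEAD"}
     and any(k in tag(m, "SeriesDescription") for k in ("DWI", "DIFFUSION"))),
    ("abdominal_ct_contrast_model",
     lambda m: tag(m, "Modality") == "CT"
     and tag(m, "BodyPartExamined") == "ABDOMEN"
     and tag(m, "ContrastBolusAgent") != ""),
]


def route_series(meta: dict) -> Optional[str]:
    """Return the first matching model, or None to flag the series for human review."""
    for name, matches in ROUTES:
        if matches(meta):
            return name
    return None


print(route_series({"Modality": "DX", "ViewPosition": "PA"}))           # frontal_cxr_model
print(route_series({"Modality": "CT", "BodyPartExamined": "abdomen"}))  # None
```

The second call returns `None` because no contrast agent is recorded for the series; when metadata is filled in inconsistently, failing closed like this is safer than guessing which model applies.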

High-profile failures

“During my brief stint at the innovation arm of the University of Pittsburgh Medical Center, it was not uncommon to see companies pitching AI-powered solutions claiming to provide 99.9% accuracy. In reality, when tested on the internal hospital dataset, they almost always fell short by a large margin.” – Sandeep Konam, 2022.

Skin cancer detection with a smartphone is one of the most promising areas for AI to make an impact. However, every skin cancer detection system being tested today suffers from bias when it comes to non-white skin. A recent study quantified this for three commercial systems. None of the systems did better than dermatologists, and they all exhibited significant drops in performance between light and dark skin. For two of the systems, sensitivity fell sharply across two sets of tasks (0.41 → 0.12, 0.45 → 0.25, 0.69 → 0.23, 0.71 → 0.31), relative drops of roughly 45% to 70%. The third model actually exhibited worse sensitivity for lighter skin, but it also failed completely at the operating point the vendor said to use, achieving sensitivities below 0.10 across the board.
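The sensitivity pairs quoted above (lighter skin first, darker skin second) can be turned into absolute and relative drops with a few lines of arithmetic. This only recomputes the four numbers given in the text; it is not a reanalysis of the study.

```python
# Sensitivity pairs (lighter skin, darker skin) as quoted in the text.
PAIRS = [(0.41, 0.12), (0.45, 0.25), (0.69, 0.23), (0.71, 0.31)]


def relative_drop(before: float, after: float) -> float:
    """Fractional decrease from `before` to `after`."""
    return (before - after) / before


for light, dark in PAIRS:
    print(f"{light:.2f} -> {dark:.2f}: "
          f"absolute drop {light - dark:.2f}, "
          f"relative drop {relative_drop(light, dark):.0%}")
```

Running it prints relative drops of about 71%, 44%, 67%, and 56%, with absolute drops between 0.20 and 0.46.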

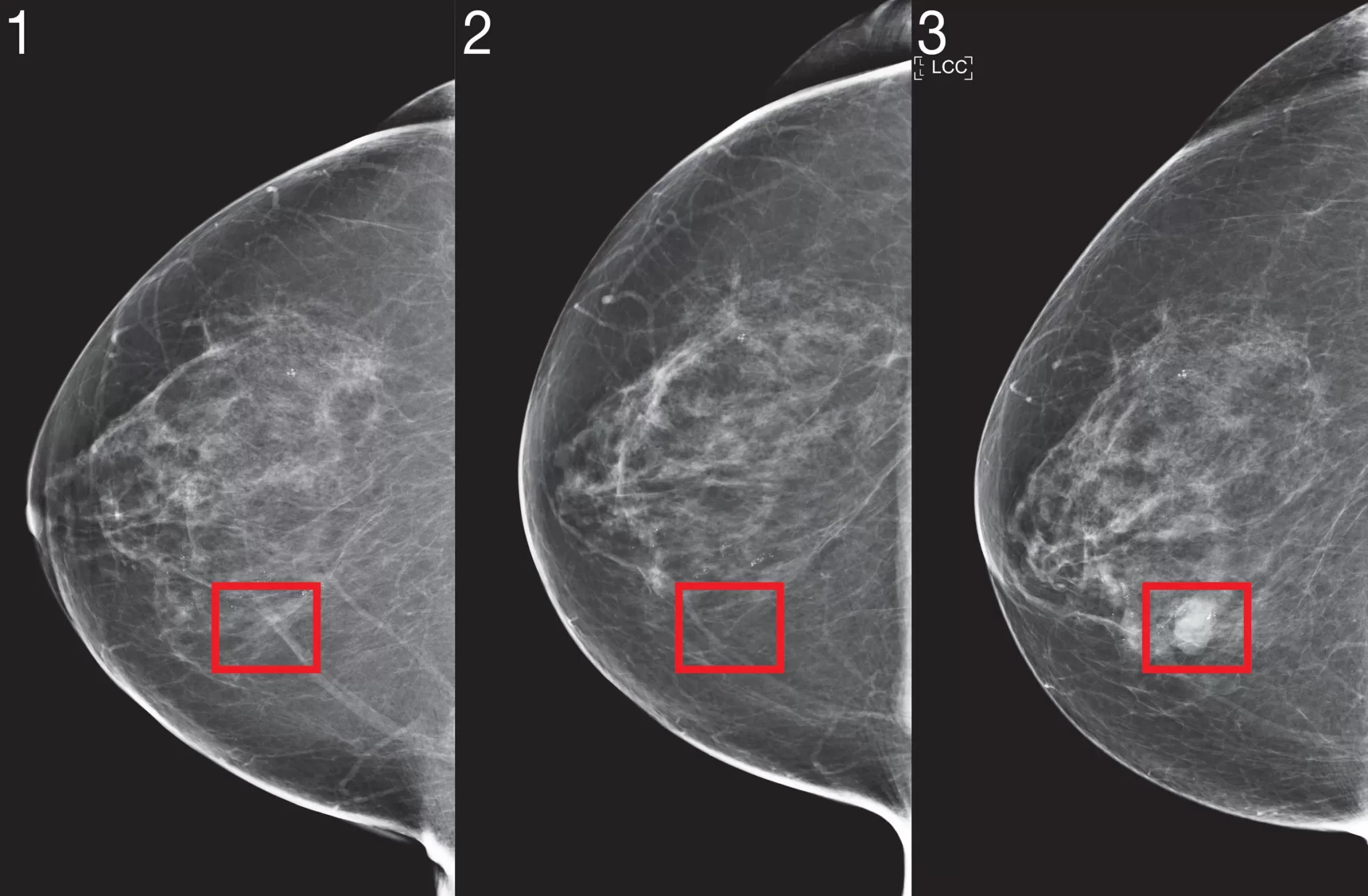

Detecting breast cancer in mammograms is probably the most researched application of computers in medical imaging, with a legacy of work going back decades (the first FDA-approved computer-aided detection system for mammography came out in 1998). A number of computer-aided detection (“CAD”) software packages for mammography were rushed to market in the mid-2010s, and the numerous failings of those systems are documented in a 2018 article. Radiologists miss 16% of breast cancers, making this the perfect application for AI, but despite intense, concerted efforts stretching over 20+ years, true radiologist-level performance has still not been reached. One of the most recent reviews, published in September 2021, found that 34 of 36 (94%) AI systems were “less accurate than a single radiologist, and all were less accurate than consensus of two or more radiologists.” [3] Still, I am optimistic AI will break through here soon, with massive benefits for patients.

Epic’s sepsis model was implemented in hundreds of hospitals to monitor patients and send an alert if they were at high risk for sepsis. The model combines real-time emergency room monitoring data (heart rate, blood pressure, etc.), demographic information, and information from the patient’s medical records, using over 60 features in total. An external validation found very poor performance (AUC 0.63 vs. the advertised AUCs of 0.73 and 0.83). Of 2,552 patients with sepsis, the model identified only 33% and raised many false alarms in the process. STAT News reporter Casey Ross and graduate student Adam Yala carried out a forensic-style investigation of Epic’s sepsis prediction approach to illuminate how distributional shifts can send machine-learning models reeling. Although they did not have access to the exact model Epic used (which is proprietary), they trained a similar model on the same features. They found that changes in ICD-10 coding standards likely contributed to a drop in the performance of Epic’s model over time, and that spurious correlations in the model’s training data also likely played a role.
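To make the metrics in the sepsis example concrete, the sketch below computes ROC AUC (the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, with ties counted as half) and, at a chosen alert threshold, sensitivity and positive predictive value. The six scores and labels are made-up toy data, not Epic's model or the validation cohort; they only show how a model can miss real cases and raise false alarms at the same time.

```python
from typing import Sequence, Tuple


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Rank-based ROC AUC: fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def sensitivity_and_ppv(scores: Sequence[float], labels: Sequence[int],
                        threshold: float) -> Tuple[float, float]:
    """Sensitivity (share of true cases alerted) and PPV (share of alerts that are true)."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    ppv = tp / (tp + fp) if tp + fp else 0.0
    return sensitivity, ppv


# Toy data: 1 = patient developed sepsis, 0 = did not (illustrative only).
scores = [0.9, 0.8, 0.3, 0.7, 0.2, 0.1]
labels = [1, 0, 1, 0, 0, 1]

print(round(roc_auc(scores, labels), 3))         # 0.444
print(sensitivity_and_ppv(scores, labels, 0.5))  # one of three cases caught; two of three alerts false
```

An AUC of 0.5 corresponds to random ranking, which puts the externally measured 0.63 in perspective. Applied to the real cohort, a 33% sensitivity means roughly 840 of the 2,552 sepsis patients were flagged.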

It’s worth noting that AI’s failings, both public and private, have lowered many doctors’ trust in AI, and that lost trust may take a long time to regain.

Despite these challenges, it is clear that AI will be an important part of the future of healthcare. As the population ages and demand for medical services increases, AI is expected to play a crucial role, particularly in radiology. For some specific tasks, AI algorithms can already analyze medical images, such as X-rays and MRI scans, with accuracy comparable to or even greater than that of human radiologists. This can help radiologists diagnose diseases and conditions more quickly and accurately, leading to better patient outcomes.

Thank you, Dan Elton, for your amazing write-up.

AI in Healthcare: Advances and Opportunities

Artificial intelligence (AI) has the potential to revolutionize the healthcare industry. With its ability to analyze large amounts of data, identify patterns, and make predictions, AI could make medical care more efficient and effective.

One of the most promising applications of AI in healthcare is in the area of medical diagnosis. Using machine learning algorithms, AI systems can analyze patient data and make predictions about potential health issues. This can help doctors and other healthcare professionals identify potential health problems earlier and provide more timely treatment.

For example, researchers at Seoul National University Hospital developed a deep learning-based automatic detection algorithm (DLAD) that analyzes chest radiographs for major thoracic diseases. In a study published in JAMA Network Open, DLAD detected these diseases with accuracy on par with, and in some comparisons better than, experienced radiologists.

Another area where AI is having a significant impact is in the management of electronic medical records (EMRs). Natural language processing (NLP) algorithms can be used to automatically extract information from EMRs, making it easier for doctors and other healthcare professionals to access and analyze patient data. This can help improve the accuracy and timeliness of medical decisions and reduce the risk of errors and omissions.
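As a rough illustration of extracting structured information from clinical text, the sketch below pulls nodule sizes out of a free-text radiology report with a regular expression and normalizes them to millimeters. Production NLP systems typically rely on trained language models that also handle negation, ranges, and multiple lesions; this rule-based version, the function name `largest_nodule_mm`, and the sample report are illustrative assumptions only.

```python
import re
from typing import Optional

# Matches sizes such as "8 mm", "1.2 cm", or "6mm" (illustrative pattern only).
SIZE_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(mm|cm)\b", re.IGNORECASE)


def largest_nodule_mm(report: str) -> Optional[float]:
    """Return the largest size, in mm, found in sentences that mention a nodule."""
    sizes = []
    # Naive sentence split: break after ., !, or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", report):
        if "nodule" not in sentence.lower():
            continue
        for value, unit in SIZE_PATTERN.findall(sentence):
            factor = 10 if unit.lower() == "cm" else 1
            sizes.append(round(float(value) * factor, 2))  # round away float noise
    return max(sizes) if sizes else None


report = ("Lungs are clear. There is a 6 mm nodule in the right upper lobe. "
          "A second 1.1 cm nodule is seen in the left lower lobe.")
print(largest_nodule_mm(report))  # 11.0
```

A rule like this breaks easily (for example, it would report 4.0 for "no nodule larger than 4 mm"), which is one reason learned NLP models are usually preferred for this kind of extraction.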

AI is also being used in the development of new drugs and treatments. Machine learning algorithms can be used to analyze vast amounts of data on the effectiveness of different drugs and treatments, helping researchers identify potential new therapies. This can speed up the drug development process and make it more efficient.

One example of this is the work being done by the pharmaceutical company Novartis. The company is using AI to analyze data from clinical trials and other sources to identify potential new treatments for cancer and other diseases. This is helping the company to identify new drug targets and develop more effective treatments.

In addition to these applications, AI is also being used in a number of other ways in the healthcare industry. For example, some hospitals are using robots to assist with surgeries, allowing doctors to perform complex procedures with greater precision and control. AI is also being used to identify potential drug interactions and improve clinical decision support systems.

Overall, the use of AI in healthcare is still in its early stages. However, the potential benefits of this technology are enormous, and as AI continues to advance, we can expect to see even more innovative and effective applications in the healthcare industry.


Author:

Jonathan is a seasoned executive with a proven track record in founding and scaling digital health and technology companies. He co-founded Oatmeal Health, a tech-enabled Cancer Screening as a Service company for underrepresented patients of FQHCs and health plans, starting with lung cancer. With a strong background in engineering, partnerships, and product development, Jonathan is recognized as a leader in the industry.

Govette has dedicated his professional life to enhancing the well-being of marginalized populations. To achieve this, he has established frameworks for initiatives aimed at promoting health equity among underprivileged communities.

REVOLUTIONIZING CANCER CARE

A Step-by-Step FQHC Guide to Lung Cancer Screening

This free, 7-page checklist for creating and launching a lung cancer screening program can save your patients’ lives; it is proven to increase lung cancer screening rates above the ~5 percent national average.
